Facebook Admits Mosque Shooting Video Was Viewed At Least 4000 Times – NPR

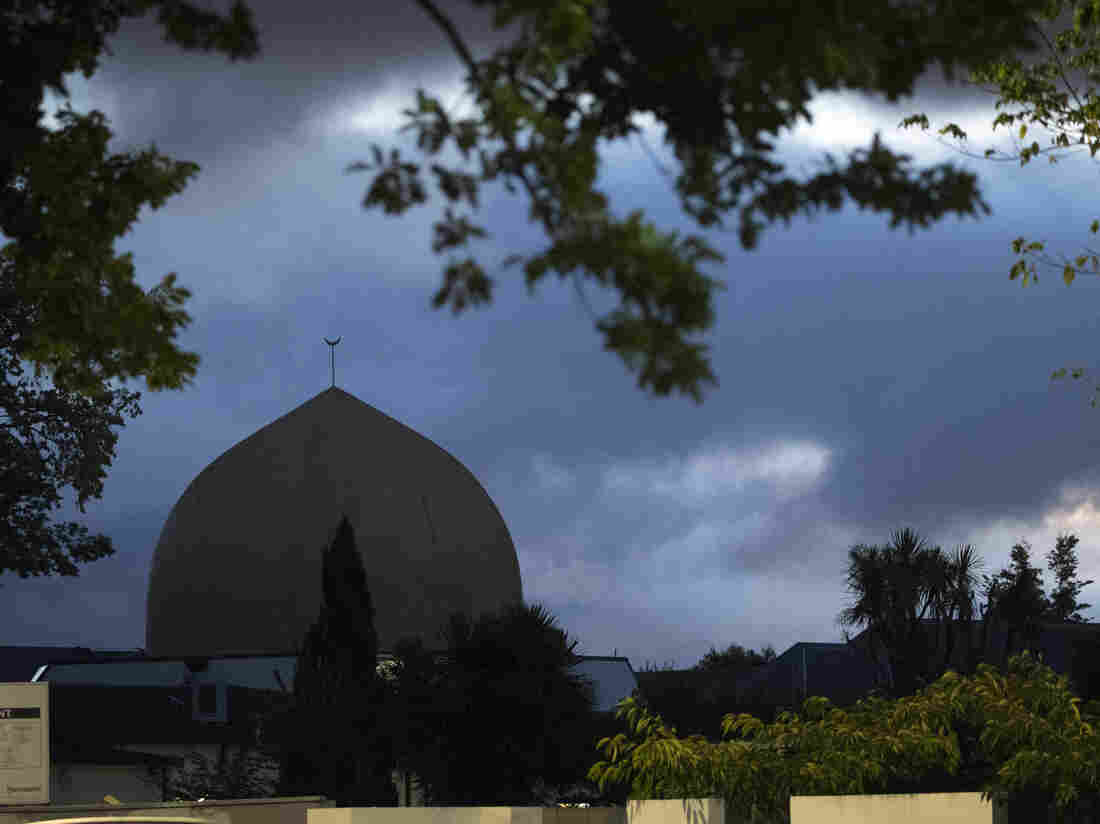

Al Noor mosque is shaded by clouds in Christchurch, New Zealand, on Tuesday.

Vincent Thian/AP

A Facebook vice president said fewer than 200 people saw the Christchurch massacre while it was being streamed live on the site. But the video was viewed about 4,000 times before Facebook removed it, he added. Countless more views occurred in the hours afterward, as copies of the video proliferated more quickly than online platforms like Facebook could remove them.

Social media and video sharing sites have faced criticism for being slow to respond to the first-ever live-streamed mass shooting, recorded from the first-person perspective of the shooter, the camera seemingly mounted atop the killer’s helmet. But executives from the sites say they have been doing what they can to combat the spread of the video, one possibly designed for an age of virality.

New Zealand Prime Minister Jacinda Ardern says she has been in contact with Facebook COO Sheryl Sandberg to ensure the video is entirely scrubbed from the platform.

And websites that continue to host footage of the attacks, such as 4chan and LiveLeak, are finding themselves blocked by the country’s major Internet access companies. “We’ve started temporarily blocking a number of sites that are hosting footage of Friday’s terrorist attack in Christchurch,” Telstra said on Twitter. “We understand this may inconvenience some legitimate users of these sites, but these are extreme circumstances and we feel this is the right thing to do.”

Facebook says that 12 minutes after the 17-minute livestream ended, a user reported the video to Facebook. By the time Facebook was able to remove it, the video had been viewed about 4,000 times on the platform, according to Chris Sonderby, the company’s vice president and deputy general counsel.

But before Facebook could remove the video, at least one person uploaded a copy to a file-sharing site and a link was posted to 8chan, a haven for right-wing extremists. Journalist Robert Evans told NPR’s Melissa Block that 8chan “is essentially the darkest, dankest corner of the Internet. It is basically a neo-Nazi gathering place. And its primary purpose is to radicalize more people into eventual acts of violent, far-right terror.”

Once the video was out in the wild, Facebook had to contend with other users trying to re-upload it to Facebook itself, or to Facebook-owned Instagram. Facebook’s systems automatically detected and removed the shares that were “visually similar” to the banned video, Sonderby said. Some variants of the video, like screen recordings, required the use of additional detection systems, such as those that identify similar audio.
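Facebook has not said how its “visually similar” matching works. As a rough illustration of the general idea only, the sketch below uses a simple average hash, a generic fingerprinting technique that is an assumption here and not Facebook's method. The function names, the tiny 4x4 grayscale frames and the `max_distance` threshold are all hypothetical.

```python
# Illustrative only: a generic "average hash", NOT Facebook's actual system.
# Frames are small grids of grayscale values (0-255); all data is made up.

def average_hash(pixels):
    """Fingerprint a frame: 1 where a pixel is brighter than the frame's mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)

def hamming_distance(a, b):
    """Count the positions where two equal-length fingerprints differ."""
    return sum(x != y for x, y in zip(a, b))

def is_visually_similar(frame_a, frame_b, max_distance=2):
    """Treat frames as similar if their fingerprints differ in few bits.

    max_distance is a hypothetical threshold chosen for this example.
    """
    return hamming_distance(average_hash(frame_a), average_hash(frame_b)) <= max_distance

original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [11, 199, 28, 225],
]
# A re-encoded copy: every pixel slightly brighter, same overall pattern.
reencoded = [[min(255, p + 8) for p in row] for row in original]
# An unrelated frame: the brightness pattern is inverted.
unrelated = [[255 - p for p in row] for row in original]

print(is_visually_similar(original, reencoded))  # True
print(is_visually_similar(original, unrelated))  # False
```

A fingerprint like this tolerates small changes such as re-encoding or mild brightening, but a screen recording shifts and crops the picture enough to defeat it, which is consistent with Sonderby's point that some variants needed additional signals such as audio.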

Facebook says more than 1.2 million copies of the video were blocked at upload, “and were therefore prevented from being seen on our services.” Facebook removed another 300,000 copies of the video globally in the first 24 hours, it said. Another way to look at those numbers, reports TechCrunch, is that Facebook “failed to block 20%” of the copies when they were uploaded.
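The 20% figure follows from Facebook's own numbers. The short calculation below treats “more than 1.2 million” as exactly 1.2 million, which is an assumption, so the result is approximate. The variable names are illustrative.

```python
# Figures Facebook reported for the first 24 hours.
blocked_at_upload = 1_200_000    # "more than 1.2 million", treated as exact here
removed_after_upload = 300_000   # copies taken down after they had been uploaded

# Total upload attempts is the sum of both groups.
total_attempts = blocked_at_upload + removed_after_upload

# Share of attempts that got past the upload filter.
missed_share = removed_after_upload / total_attempts

print(f"{missed_share:.0%}")  # 20%
```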

Other video sharing sites also found themselves coping with an enormous influx of uploads. “The volumes at which that content was being copied and then re-uploaded to our platform was unprecedented in nature,” Neal Mohan, chief product officer for YouTube, told NPR’s Ailsa Chang. During the first few hours, YouTube saw about one upload every second, he said. (Note: YouTube is among NPR’s financial sponsors.)

The New Zealand mosque shooting was the most disturbing video I have ever seen. I saw Little kids being shot in the head. I am a Muslim who lives in NZ. it could’ve been my little brother but I will not blame all of Australia for the action of one Australian, do you get me?

— عُمر (@OmarImranTweets) March 15, 2019

Blocking or deleting the videos was made harder because the video came in many different forms, Mohan said. “We had to deal with not just the original video and its copies, but also all the permutations and combinations,” he said, adding that there were tens of thousands of those.

The first-person viewpoint made for an unusual technical challenge for computers that had not been trained to detect videos from that perspective, Mohan told NPR. “Our algorithms are having to learn literally on the fly the second the incident happens without having the benefit of, you know, lots and lots of training data on which to have learned.”

New Zealand companies say they’re considering whether they want to be associated with social media sites that can’t effectively moderate content. “The events in Christchurch raise the question, if the site owners can target consumers with advertising in microseconds, why can’t the same technology be applied to prevent this kind of content being streamed live?” the Association of New Zealand Advertisers and the Commercial Communications Council said in a joint statement. “We challenge Facebook and other platform owners to immediately take steps to effectively moderate hate content before another tragedy can be streamed online.”

As online companies attempt to block the video using technology, the government of New Zealand is using more traditional methods to stop the spread of the video there. The country’s chief censor has deemed the video “objectionable,” making it illegal to share it within the country. An 18-year-old who was not involved in the attack was charged after he shared the video and posted a photo of one of the mosques along with the phrase “target acquired.”